
Amazon typically asks interviewees to code in a shared online document. Now that you know what questions to expect, let's focus on how to prepare.

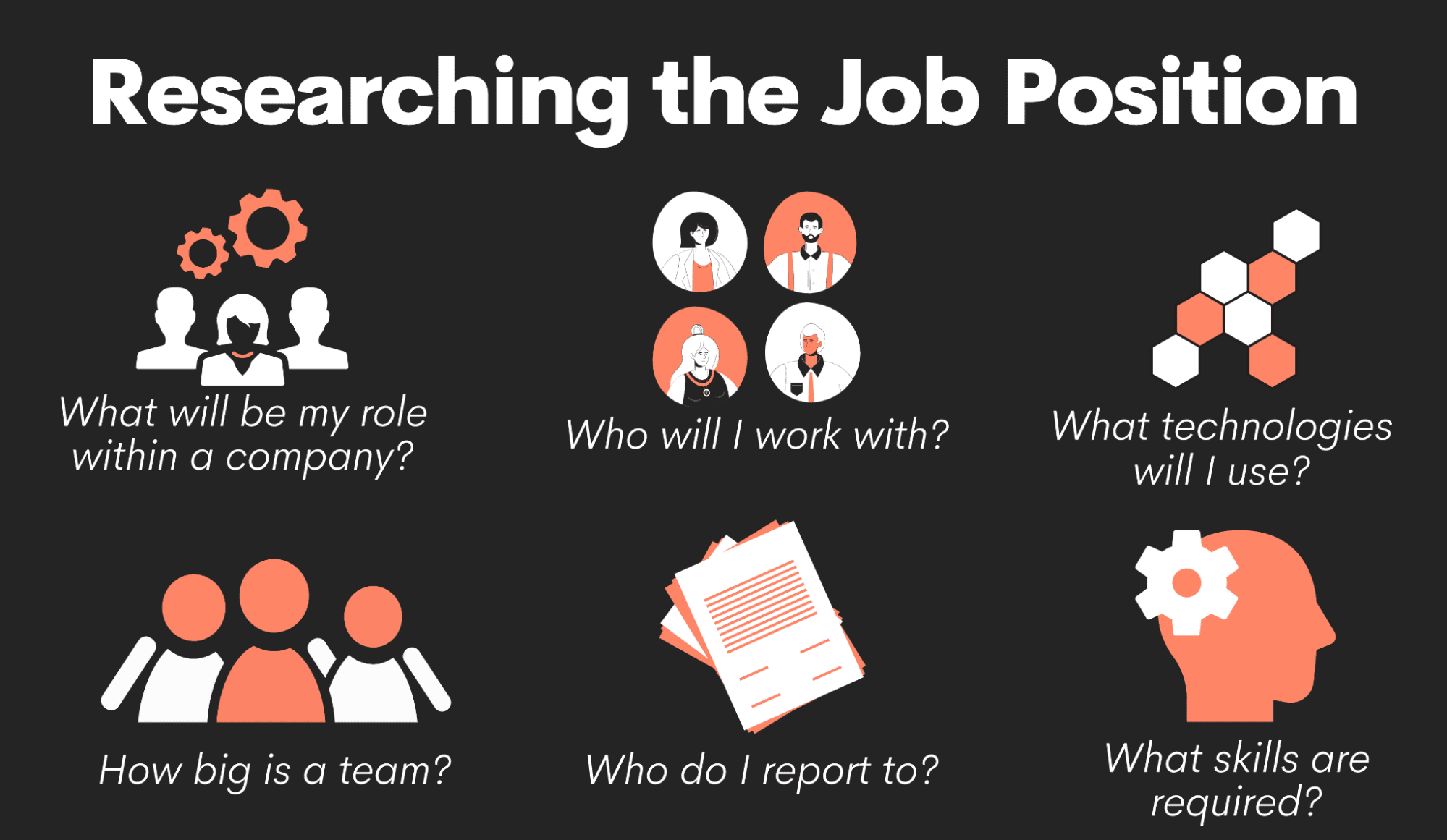

Below is our four-step preparation strategy for Amazon data scientist candidates. Before spending tens of hours preparing for an interview at Amazon, you should take some time to make sure it's actually the right company for you.

, which, although it's written around software development, should give you an idea of what they're looking for.

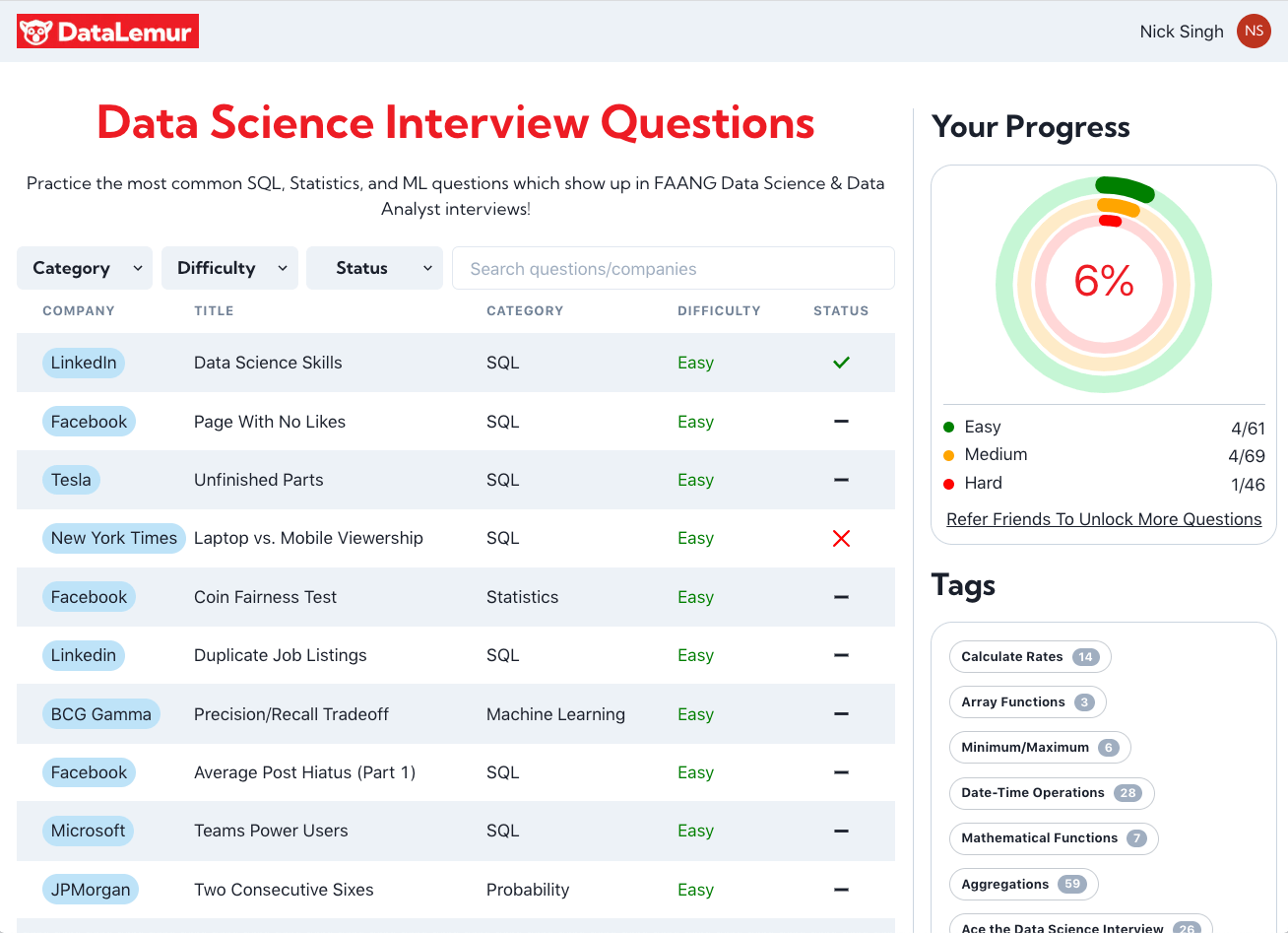

Keep in mind that in the onsite rounds you'll likely have to code on a whiteboard without being able to execute it, so practice working through problems on paper. For machine learning and statistics questions, offers online courses built around statistical probability and other useful topics, some of which are free. Kaggle also offers free courses on introductory and intermediate machine learning, as well as data cleaning, data visualization, SQL, and others.

Mock System Design For Advanced Data Science Interviews

Make sure you have at least one story or example for each of the principles, drawn from a variety of positions and projects. Finally, a great way to practice all of these different types of questions is to interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

Trust us, it works. Practicing by yourself will only take you so far. One of the main challenges of data scientist interviews at Amazon is communicating your answers in a way that's easy to understand. For this reason, we strongly recommend practicing with a peer interviewing you. If possible, a great place to start is to practice with friends.

However, be warned, as you may run into the following problems:
- It's hard to know if the feedback you get is accurate.
- They're unlikely to have insider knowledge of interviews at your target company.
- On peer platforms, people often waste your time by not showing up.

For these reasons, many candidates skip peer mock interviews and go straight to mock interviews with an expert.

Creating Mock Scenarios For Data Science Interview Success

That's an ROI of 100x!

Traditionally, data science focuses on mathematics, computer science and domain expertise. While I will briefly cover some computer science fundamentals, the bulk of this blog will mainly cover the mathematical basics you might need to brush up on (or even take an entire course on).

While I understand that many of you reading this are more math-heavy by nature, realize that the bulk of data science (dare I say 80%+) is collecting, cleaning and processing data into a useful form. As for programming languages, Python and R are the most popular ones in the data science space. I have also come across C/C++, Java and Scala.

Top Challenges For Data Science Beginners In Interviews

It is common to see most data scientists fall into one of two camps: mathematicians and database architects. If you are the latter, this blog won't help you much (YOU ARE ALREADY AWESOME!).

Data collection might mean gathering sensor data, scraping websites or conducting surveys. After collecting the data, it needs to be transformed into a usable form (e.g. a key-value store in JSON Lines files). Once the data is collected and stored in a usable format, it is essential to run some data quality checks.
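As an illustration of the two steps just described, here is a minimal sketch. It serializes raw records to JSON Lines (one JSON object per line) and then runs three basic quality checks: missing values, exact duplicates, and out-of-range readings. The sensor records, the field names (`sensor_id`, `temp_c`, `ts`), the plausible temperature range, and the helper names are all hypothetical, chosen only for this example.

```python
import json
from typing import Any

# Hypothetical raw sensor readings; field names and values are illustrative only.
raw_records = [
    {"sensor_id": "a1", "temp_c": 21.5, "ts": "2024-01-01T00:00:00"},
    {"sensor_id": "a2", "temp_c": None, "ts": "2024-01-01T00:00:00"},   # missing value
    {"sensor_id": "a1", "temp_c": 21.5, "ts": "2024-01-01T00:00:00"},   # exact duplicate
    {"sensor_id": "a3", "temp_c": 999.0, "ts": "2024-01-01T00:01:00"},  # implausible value
]


def to_json_lines(records: list[dict[str, Any]]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(r, sort_keys=True) for r in records)


def quality_report(records: list[dict[str, Any]], numeric_field: str,
                   valid_range: tuple[float, float]) -> dict[str, int]:
    """Count rows, missing values, exact duplicates and out-of-range values."""
    lo, hi = valid_range
    missing = sum(1 for r in records if r.get(numeric_field) is None)
    seen: set[str] = set()
    duplicates = 0
    for r in records:
        key = json.dumps(r, sort_keys=True)  # canonical form for comparing records
        if key in seen:
            duplicates += 1
        seen.add(key)
    out_of_range = sum(
        1 for r in records
        if r.get(numeric_field) is not None and not lo <= r[numeric_field] <= hi
    )
    return {"rows": len(records), "missing": missing,
            "duplicates": duplicates, "out_of_range": out_of_range}


jsonl = to_json_lines(raw_records)
parsed = [json.loads(line) for line in jsonl.splitlines()]  # round-trip the file contents
print(quality_report(parsed, "temp_c", (-50.0, 60.0)))
```

In a real pipeline the JSON Lines string would be written to a file. The checks you need also depend on the data source, so treat these three as a starting point rather than a complete list.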

End-to-end Data Pipelines For Interview Success

In fraud cases, heavy class imbalance is very common (e.g. only 2% of the dataset is actual fraud). This information is essential for making the right choices in feature engineering, modelling and model evaluation. For more information, check out my blog on Fraud Detection Under Extreme Class Imbalance.
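One quick check is to measure the class proportions before choosing a metric. This minimal sketch uses hypothetical labels with 2% positives (1 = fraud, 0 = legitimate), matching the example in the text. It shows why accuracy alone misleads: a trivial model that never predicts fraud still scores 98%.

```python
from collections import Counter


def class_balance(labels: list[int]) -> dict[int, float]:
    """Return the fraction of the dataset belonging to each class."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: n / total for cls, n in counts.items()}


# Hypothetical labels: 20 fraud cases out of 1,000 transactions.
labels = [1] * 20 + [0] * 980
balance = class_balance(labels)
print(balance)  # {1: 0.02, 0: 0.98}

# A "model" that always predicts 0 (not fraud) is 98% accurate,
# yet it catches none of the fraud cases (recall on class 1 is 0).
always_zero_accuracy = sum(1 for y in labels if y == 0) / len(labels)
always_zero_recall = sum(1 for y in labels if y == 1 and 0 == 1) / labels.count(1)
print(always_zero_accuracy, always_zero_recall)
```

For imbalanced problems like this, metrics such as precision, recall or the area under the precision-recall curve are usually more informative than raw accuracy.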

In bivariate analysis, each feature is compared to the other features in the dataset. Scatter matrices allow us to find hidden patterns such as:
- features that should be engineered together
- features that may need to be removed to avoid multicollinearity

Multicollinearity is a real problem for many models, such as linear regression, and therefore needs to be handled accordingly.
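A scatter matrix is a visual tool; pandas offers one as `pandas.plotting.scatter_matrix`. A correlation matrix is a quick numeric companion for spotting multicollinearity. The sketch below flags feature pairs whose absolute Pearson correlation exceeds a threshold. The synthetic features and the 0.9 cutoff are assumptions chosen for illustration, not a universal rule.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200
# Hypothetical features: x2 is almost a scaled copy of x1; x3 is independent.
x1 = rng.normal(size=n)
x2 = 2.0 * x1 + rng.normal(scale=0.05, size=n)
x3 = rng.normal(size=n)
X = np.column_stack([x1, x2, x3])
names = ["x1", "x2", "x3"]


def highly_correlated_pairs(X: np.ndarray, names: list[str], threshold: float = 0.9):
    """Return (name_i, name_j, r) for feature pairs with |Pearson r| >= threshold."""
    corr = np.corrcoef(X, rowvar=False)  # columns are variables
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(corr[i, j]) >= threshold:
                pairs.append((names[i], names[j], round(float(corr[i, j]), 3)))
    return pairs


print(highly_correlated_pairs(X, names))
```

Once a pair like (x1, x2) is flagged, you might drop one of the two features or combine them into a single engineered feature.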

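Imagine working with internet usage data. YouTube users may consume gigabytes of data, while Facebook Messenger users use only a few megabytes. Features on such different scales usually need to be rescaled so that the larger-magnitude feature does not dominate the model.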
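Two common rescaling techniques are standardization (subtract the mean, divide by the standard deviation) and min-max scaling (map the values onto [0, 1]). This is a minimal sketch using only the standard library. The usage numbers are hypothetical megabyte values chosen to mimic the YouTube versus Messenger gap.

```python
from statistics import mean, pstdev

# Hypothetical usage in megabytes for the two kinds of users.
youtube_mb = [3000.0, 5000.0, 8000.0, 12000.0]
messenger_mb = [2.0, 3.0, 5.0, 6.0]


def standardize(values: list[float]) -> list[float]:
    """Z-score: subtract the mean and divide by the population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("cannot standardize a constant feature")
    return [(v - mu) / sigma for v in values]


def min_max(values: list[float]) -> list[float]:
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot min-max scale a constant feature")
    return [(v - lo) / (hi - lo) for v in values]


print(min_max(messenger_mb))  # [0.0, 0.25, 0.75, 1.0]
print(standardize(youtube_mb))
```

In practice, compute the scaling parameters (mean, standard deviation, min, max) on the training data only, and reuse them to transform validation and test data.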

Another issue is categorical values. While categorical values are common in data science, remember that computers can only understand numbers. For categorical values to make mathematical sense, they need to be transformed into something numerical. A common way to do this is One-Hot Encoding.
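This minimal one-hot encoder, written with only the standard library, shows the mechanics. Each category gets its own column, and every row has a single 1 in the column of its category. The color values are hypothetical. In practice, `pandas.get_dummies` or scikit-learn's `OneHotEncoder` do the same job with more options.

```python
def one_hot(values: list[str]) -> tuple[list[str], list[list[int]]]:
    """Return the sorted category list and one binary row per input value."""
    categories = sorted(set(values))
    index = {c: i for i, c in enumerate(categories)}  # category -> column position
    rows = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        rows.append(row)
    return categories, rows


cats, encoded = one_hot(["red", "green", "blue", "green"])
print(cats)     # ['blue', 'green', 'red']
print(encoded)  # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```

Note that a feature with many distinct categories produces many mostly-zero columns.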

Advanced Coding Platforms For Data Science Interviews

At times, having too many sparse dimensions will hurt the performance of the model. In such situations (as is common in image recognition), dimensionality reduction algorithms are used. A commonly used algorithm for dimensionality reduction is Principal Component Analysis, or PCA. Learn the mechanics of PCA, as it is also one of those topics that come up in interviews!!! For more information, check out Michael Galarnyk's blog on PCA using Python.
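To make the mechanics concrete, here is a minimal PCA sketch using NumPy's singular value decomposition. It centers the data, takes the top right-singular vectors as principal directions, projects onto them, and reports the fraction of variance each kept component explains. The synthetic 3-D data, which varies mostly along one direction, and the function name `pca` are assumptions for illustration.

```python
import numpy as np


def pca(X: np.ndarray, n_components: int):
    """Project X onto its top principal components via SVD of the centered data."""
    X_centered = X - X.mean(axis=0)  # PCA is defined on mean-centered features
    # Rows of Vt are the principal directions, sorted by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_variance = S**2 / (X.shape[0] - 1)
    ratio = explained_variance[:n_components] / explained_variance.sum()
    projected = X_centered @ components.T
    return projected, components, ratio


rng = np.random.default_rng(42)
t = rng.normal(size=500)
# Hypothetical data: three features driven by one latent variable plus small noise.
X = np.column_stack([t, 2 * t, -t]) + rng.normal(scale=0.1, size=(500, 3))
Z, comps, ratio = pca(X, n_components=1)
print(Z.shape, ratio)  # one component keeps almost all of the variance
```

scikit-learn's `sklearn.decomposition.PCA` wraps the same idea. Writing it out by hand is a good way to be ready for "how does PCA work?" questions.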

The common categories of feature selection methods and their subcategories are explained in this section. Filter methods are generally used as a preprocessing step. The selection of features is independent of any machine learning algorithm. Instead, features are selected based on their scores in various statistical tests of their correlation with the outcome variable.
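Here is a minimal sketch of a filter method. Each feature is scored by the absolute Pearson correlation with the outcome, and the top `k` are kept, without training any model. The synthetic data, where only features 0 and 2 drive `y`, and the helper name `filter_select` are hypothetical. scikit-learn's `SelectKBest` offers a production version of this idea with other scoring functions.

```python
import numpy as np


def filter_select(X: np.ndarray, y: np.ndarray, k: int):
    """Rank features by |Pearson correlation| with y and return the top-k indices."""
    scores = [abs(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])]
    ranked = np.argsort(scores)[::-1]  # highest score first
    return ranked[:k].tolist(), scores


rng = np.random.default_rng(7)
X = rng.normal(size=(300, 3))
# Hypothetical outcome: depends on features 0 and 2; feature 1 is pure noise.
y = 3 * X[:, 0] - 2 * X[:, 2] + rng.normal(scale=0.5, size=300)
selected, scores = filter_select(X, y, k=2)
print(selected, [round(s, 2) for s in scores])
```

Because no model is involved, this is cheap. The trade-off is that it scores each feature in isolation and can miss interactions between features.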

Common methods in this category are Pearson's Correlation, Linear Discriminant Analysis, ANOVA and Chi-Square. In wrapper methods, we take a subset of features and train a model using them. Based on the inferences we draw from that model, we decide to add or remove features from the subset.
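Forward selection is one classic wrapper method. This sketch shows the train, evaluate, add-a-feature loop. It starts from an empty set and greedily adds whichever feature most lowers validation mean squared error for an ordinary least-squares model, stopping when no feature helps. The synthetic data, the train/validation split, and the helper names are assumptions for illustration.

```python
import numpy as np


def fit_predict(X_train, y_train, X_test):
    """Fit ordinary least squares (with intercept) and predict on X_test."""
    A = np.column_stack([np.ones(len(X_train)), X_train])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    B = np.column_stack([np.ones(len(X_test)), X_test])
    return B @ coef


def forward_selection(X_train, y_train, X_val, y_val, max_features):
    """Greedily add the feature that most reduces validation MSE."""
    selected: list[int] = []
    remaining = list(range(X_train.shape[1]))
    best_score = np.inf
    while remaining and len(selected) < max_features:
        trial_scores = {}
        for j in remaining:  # retrain one model per candidate feature
            cols = selected + [j]
            pred = fit_predict(X_train[:, cols], y_train, X_val[:, cols])
            trial_scores[j] = np.mean((y_val - pred) ** 2)
        j_best = min(trial_scores, key=trial_scores.get)
        if trial_scores[j_best] >= best_score:
            break  # no candidate improves validation error
        best_score = trial_scores[j_best]
        selected.append(j_best)
        remaining.remove(j_best)
    return selected, best_score


rng = np.random.default_rng(3)
X = rng.normal(size=(400, 5))
# Hypothetical target: only features 1 and 3 matter.
y = 4 * X[:, 1] + 1.5 * X[:, 3] + rng.normal(scale=0.5, size=400)
selected, mse = forward_selection(X[:300], y[:300], X[300:], y[300:], max_features=5)
print(selected, round(float(mse), 3))
```

Each step retrains one model per remaining feature. That retraining is exactly why wrapper methods become expensive as the number of features grows.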

Data Engineering Bootcamp Highlights

These methods are usually very computationally expensive. Common methods in this category are Forward Selection, Backward Elimination and Recursive Feature Elimination. Embedded methods combine the qualities of filter and wrapper methods. They are implemented by algorithms that have their own built-in feature selection. LASSO and Ridge are common examples. Their regularized objectives are given below for reference:

Lasso: $\min_{\beta} \sum_{i=1}^{n} (y_i - x_i^\top \beta)^2 + \lambda \sum_{j=1}^{p} |\beta_j|$

Ridge: $\min_{\beta} \sum_{i=1}^{n} (y_i - x_i^\top \beta)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$

That being said, it is important to understand the mechanics behind LASSO and Ridge for interviews.
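This sketch shows the practical difference between the two penalties. It assumes the penalized least-squares objectives $\sum_i (y_i - x_i^\top\beta)^2 + \lambda\sum_j|\beta_j|$ (Lasso) and $\sum_i (y_i - x_i^\top\beta)^2 + \lambda\sum_j\beta_j^2$ (Ridge), with no intercept on centered data. Ridge has a closed-form solution. Lasso is solved here by coordinate descent with soft-thresholding, one standard approach among several. The data, the value of `lam`, and the helper names are hypothetical.

```python
import numpy as np


def ridge(X: np.ndarray, y: np.ndarray, lam: float) -> np.ndarray:
    """Closed form for sum((y - X b)^2) + lam * sum(b^2): (X'X + lam*I) b = X'y."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


def soft_threshold(z: float, gamma: float) -> float:
    """Shrink z toward zero by gamma, returning exactly 0 when |z| <= gamma."""
    return np.sign(z) * max(abs(z) - gamma, 0.0)


def lasso(X: np.ndarray, y: np.ndarray, lam: float, n_iter: int = 200) -> np.ndarray:
    """Coordinate descent for sum((y - X b)^2) + lam * sum(|b|)."""
    beta = np.zeros(X.shape[1])
    col_sq = (X**2).sum(axis=0)
    for _ in range(n_iter):
        for j in range(X.shape[1]):
            # Partial residual: remove every feature's contribution except feature j.
            r_j = y - X @ beta + X[:, j] * beta[j]
            beta[j] = soft_threshold(X[:, j] @ r_j, lam / 2.0) / col_sq[j]
    return beta


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = 3 * X[:, 0] + rng.normal(scale=0.5, size=200)  # only feature 0 matters
X, y = X - X.mean(axis=0), y - y.mean()  # center so no intercept is needed

b_ridge, b_lasso = ridge(X, y, lam=100.0), lasso(X, y, lam=100.0)
print("ridge:", np.round(b_ridge, 3))  # irrelevant coefficients shrink but stay nonzero
print("lasso:", np.round(b_lasso, 3))  # irrelevant coefficients become exactly 0
```

The key interview takeaway is that the L1 penalty can set coefficients exactly to zero, which is what makes Lasso a feature selector. The L2 penalty only shrinks them.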

Supervised learning is when labels are available; unsupervised learning is when they are not. That being said, do not mix the two up!!! This mistake is enough for the interviewer to end the interview. Another rookie mistake people make is not normalizing the features before running the model.

Linear and logistic regression are the most basic and commonly used machine learning algorithms out there. One common interview slip is starting the analysis with a more complex model, like a neural network, before trying anything simpler. Benchmarks are important.
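A benchmark can be as simple as the two baselines in this sketch. The first predicts the majority class. The second is a plain logistic regression fitted by batch gradient descent on the average log-loss. Any more complex model then has to beat these numbers to justify itself. The synthetic data, learning rate, iteration count and helper names are assumptions. Accuracy is measured on the training data only to keep the example short.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(X: np.ndarray, y: np.ndarray, lr: float = 0.1, n_iter: int = 2000) -> np.ndarray:
    """Batch gradient descent on the mean log-loss; w[0] is the intercept."""
    A = np.column_stack([np.ones(len(X)), X])
    w = np.zeros(A.shape[1])
    for _ in range(n_iter):
        p = sigmoid(A @ w)
        w -= lr * A.T @ (p - y) / len(y)  # gradient of the mean log-loss
    return w


def predict(w: np.ndarray, X: np.ndarray) -> np.ndarray:
    A = np.column_stack([np.ones(len(X)), X])
    return (sigmoid(A @ w) >= 0.5).astype(int)


rng = np.random.default_rng(1)
X = rng.normal(size=(400, 2))
# Hypothetical labels from a noisy linear rule.
y = (X[:, 0] + 0.5 * X[:, 1] + rng.normal(scale=0.3, size=400) > 0).astype(int)

majority = np.bincount(y).argmax()
baseline_acc = np.mean(y == majority)
w = fit_logistic(X, y)
logit_acc = np.mean(predict(w, X) == y)
print(f"majority class: {baseline_acc:.2f}, logistic regression: {logit_acc:.2f}")
```

In a real analysis you would compare these baselines on a held-out set. A reference implementation such as scikit-learn's `LogisticRegression` is also a sensible choice over hand-written gradient descent.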
